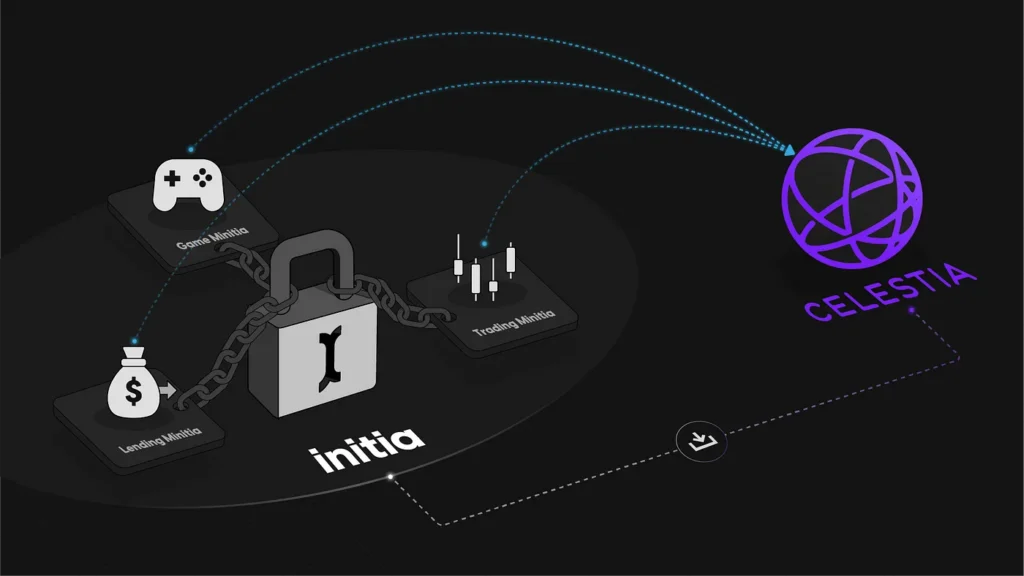

Initia, which we already introduced on our blog, is a Layer 1 blockchain incorporating Layer 2 chains (Minitias) to support a network of interconnected, modular sub-networks, thereby enhancing scalability, efficiency, and flexibility within the ecosystem termed ‘Omnitia’. The harmony between the L1 and L2 is achieved through an Initia-specific technology stack that allows developers to implement chain-level mechanisms that align the economic interests of users and developers across the ecosystem. Effectively, Initia architecturally embeds specific L2s into the foundational L1, which is why it is described as a network for interwoven rollups.

Initia operates within the dynamic Cosmos ecosystem, where emerging chains compete to strike a balance between security and scalability while offering distinctive functionalities to attract users. Such an environment fosters the development of innovative frameworks including Partial Set Security, as previously discussed on our blog. In this article, we delve into a technical examination of Celestia’s Data Availability technology, a pivotal component of Omnitia Shared Security, Initia’s flexible yet robust security framework.

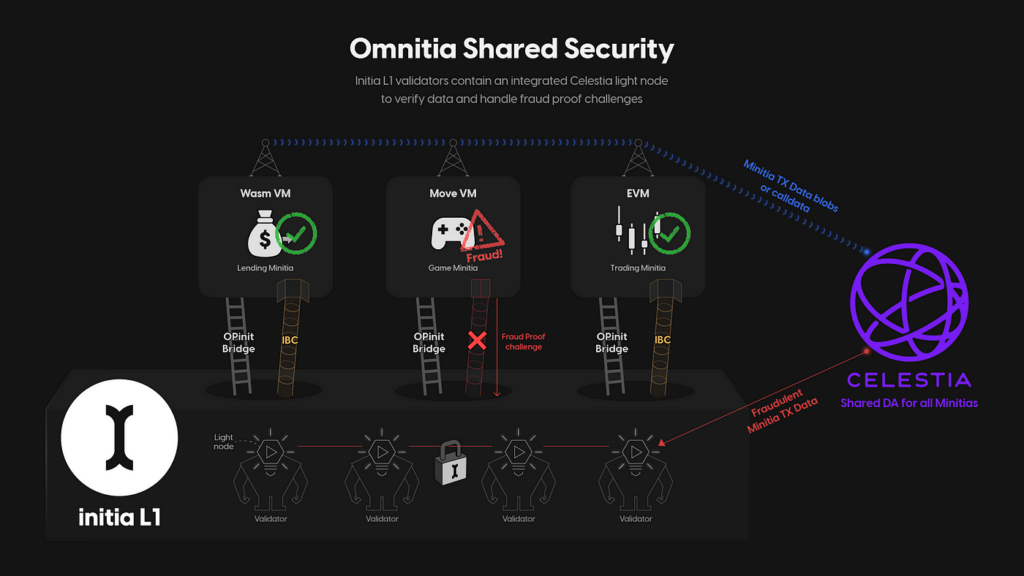

Omnitia Shared Security (OSS)

Omnitia Shared Security (OSS) is a scalable security framework designed to safeguard assets across millions of Minitias that can be interwoven into Initia. When a fraud challenge is raised on an L2, the L1 validator set is called upon to resolve the dispute. The L1 set can do this because Celestia light nodes are integrated into the validator nodes, which lets validators verify data across Minitias without downloading complete blocks. Relying on the Initia Layer 1 for security and data settlement also enables the integration of advanced rollup functionalities and the use of standard Cosmos SDK modules like AuthZ and Feegrant, as well as custom modules like Skip Protocol’s Protocol-Owned Builder (POB).

Effectively, Minitias can send transaction data directly to Celestia’s nodes to determine the state of the aggregated chain and verify the accuracy of state transitions.

The Key Components of OSS include:

- Shared Data Availability Layer: Provides the Initia Layer 1 validator set, challengers, and bridge operators with access to the state data required to construct fraud proofs against invalid rollup operations.

- Direct Posting to Celestia: Allows Minitias to post transaction data directly to Celestia, enabling deterministic verification of the rollup chain’s state transitions.

- Data Verification: Utilises Celestia’s Namespaced Merkle Trees (NMT) and Data Availability Sampling (DAS), enabling relevant actors to download and verify only the necessary transactions, optimising resource usage.

Below, we deep-dive into key components of OSS, including Celestia Light Nodes, the Data Availability Layer, and the Namespaced Merkle Tree, that ensure robustness and interoperability across Initia’s layered infrastructure.

Celestia Light Nodes

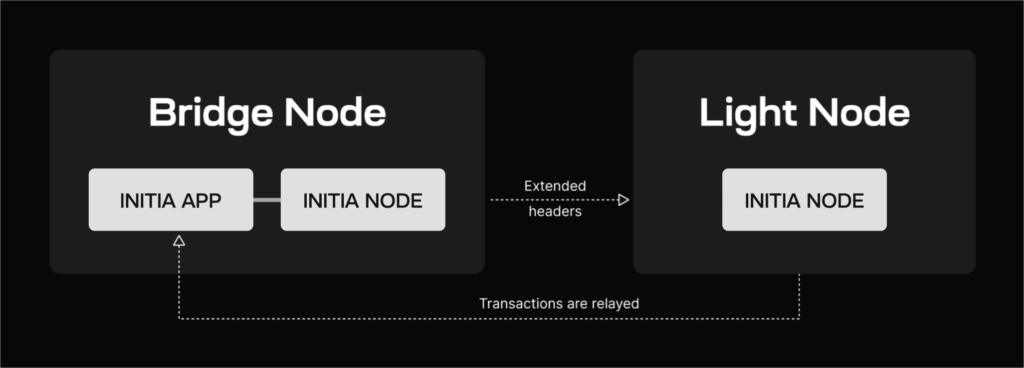

A conventional light node is a type of node that only downloads and verifies block headers, relying on an honest majority assumption that the state of the chain indicated by the block header is valid. Since light clients aren’t required to download and execute transactions like full nodes, they can run on considerably cheaper hardware, at the expense of weaker security guarantees.

Celestia’s light nodes, however, do not make an honest majority assumption for state validity, because they can also check the body of the block through data availability sampling (DAS), which strengthens their security guarantees.

Effectively, the Celestia light node performs data availability sampling (DAS) without needing to store the entire blockchain. Here’s a detailed overview of this process:

Key Features and Functions of Celestia Light Nodes

- Data Availability Sampling (DAS): The primary function of a Celestia light node is to ensure that data referenced by the blockchain is available and can be retrieved. It does this by performing DAS, which involves randomly sampling pieces of the data to confirm its completeness and accessibility – a technology decomposed later in this piece.

- Listening for ExtendedHeaders: Light nodes receive ExtendedHeaders, which are enriched versions of raw block headers containing additional metadata related to data availability. These headers notify light nodes of new blocks and the associated data availability information.

- Reduced Resource Requirements: Unlike full nodes, light nodes do not store the entire blockchain. Instead, they only store block headers and perform DAS, therefore reducing the hardware and bandwidth requirements, and making it feasible to run light nodes on less powerful machines.

Use Cases

Due to efficiency and accessibility, Celestia light nodes are typically used in scenarios where full data storage is unnecessary or impractical, such as:

- Mobile and Lightweight Applications: Devices with limited resources, such as mobile phones or embedded systems, can still participate in the network and ensure data availability.

- Decentralised Applications (dApps): Developers can leverage light nodes to create decentralised applications that require verification of data availability without the overhead of full node operation.

Therefore, light nodes are well suited to Initia, a decentralised blockchain network that aims to be fully accessible through a mobile application. More specifically, by only storing headers and performing DAS, light nodes significantly reduce storage and computational requirements, potentially making it possible for Initia to deploy its solution on Apple’s iOS. Since resource requirements for interaction are lowered, a broader range of users and devices can participate in the network, enhancing decentralisation and security.

However, broader usage necessitates scalability. To this end, light nodes contribute to the mass usage of Initia by distributing the workload of data availability verification across many lightweight participants.

In summary, Celestia light nodes play a vital role in ensuring data availability and therefore a secure functioning of the Initia blockchain network while maintaining low resource consumption, making the network accessible and scalable.

Data Availability Sampling (DAS)

Initia relies on Celestia’s Data Availability Sampling (DAS) technology, a mechanism that lets light nodes verify data availability without downloading all the data for a block. For DAS to work, light nodes conduct multiple rounds of random sampling: each node downloads only a small, random subset of the block data and uses probabilistic guarantees to infer the availability of the entire block.

The more rounds of block data sampling are completed, the higher the confidence that data is available. Once the light node successfully reaches a predetermined confidence level (e.g. 99%) it will consider the block data as available.
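To make the confidence figure concrete, here is a minimal Python sketch of how many samples a light node might need. It rests on a simplified model that is not stated in this article: a block producer hiding data is assumed to withhold at least about a quarter of the extended shares, and samples are treated as independent. The function name `samples_needed` and the `withheld_fraction` parameter are illustrative only, not protocol constants.

```python
import math


def samples_needed(confidence: float, withheld_fraction: float = 0.25) -> int:
    """Smallest s such that 1 - (1 - withheld_fraction)**s >= confidence.

    Assumption: each sample independently lands on a withheld share with
    probability `withheld_fraction` (a simplified model for illustration).
    """
    if not 0 < confidence < 1 or not 0 < withheld_fraction < 1:
        raise ValueError("both arguments must lie strictly between 0 and 1")
    miss_per_sample = 1 - withheld_fraction
    return math.ceil(math.log(1 - confidence) / math.log(miss_per_sample))


print(samples_needed(0.99))  # 17 under the stated assumptions
```

Under these assumptions a 99% target needs 17 successful samples, since 0.75 raised to the 16th power is still slightly above 0.01. A stricter target or a smaller assumed withheld fraction raises the count.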

When implemented in blockchain designs like Initia, data availability sampling enables light nodes to contribute to both the security and throughput of the network with significantly cheaper hardware than that of full nodes.

Data Availability Sampling Explained

Before data availability sampling starts, the available data is divided and encoded. After these refined data points are sampled, light nodes share the information for verification. Below we go through this process step-by-step.

Block Data and Encoding:

- Each block’s data is divided into smaller units called shares.

- These shares are arranged in a two-dimensional matrix.

- The matrix is extended with parity data using a 2-dimensional Reed-Solomon encoding scheme to create an extended matrix (we explain this model later).

- Separate Merkle roots are calculated for each row and column of the extended matrix.

The overall block data commitment is the Merkle root of these row and column Merkle roots. Put differently, the ultimate cryptographic summary (or commitment) of all the data in a block is created by taking the Merkle roots of each row and column of the extended matrix (formed by the block’s data and parity data) and then combining them into a final Merkle root.
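The commitment structure described above can be sketched in a few lines of Python. This is an illustration under assumptions, not Celestia’s implementation: a plain SHA-256 binary Merkle tree stands in for whatever tree construction the real network uses, the matrix side is assumed to be a power of two, and the names `merkle_root` and `data_root` are hypothetical.

```python
import hashlib


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves: list[bytes]) -> bytes:
    """Plain binary Merkle root (assumes a power-of-two number of leaves)."""
    if not leaves or len(leaves) & (len(leaves) - 1):
        raise ValueError("expects a power-of-two number of leaves")
    # Domain-separate leaves (0x00) from inner nodes (0x01).
    level = [_h(b"\x00" + leaf) for leaf in leaves]
    while len(level) > 1:
        level = [_h(b"\x01" + level[i] + level[i + 1])
                 for i in range(0, len(level), 2)]
    return level[0]


def data_root(extended_matrix: list[list[bytes]]) -> bytes:
    """Merkle root over all row roots followed by all column roots."""
    row_roots = [merkle_root(row) for row in extended_matrix]
    col_roots = [merkle_root(list(col)) for col in zip(*extended_matrix)]
    return merkle_root(row_roots + col_roots)


# A 4 x 4 matrix of dummy shares standing in for an extended block.
matrix = [[bytes([r, c]) for c in range(4)] for r in range(4)]
print(data_root(matrix).hex())
```

Because every share feeds into one row root and one column root, changing a single share changes the final root, which is what lets a header commit to the whole block.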

Data Availability Sampling:

- Light nodes randomly select unique coordinates within the extended matrix.

- They request the data shares and corresponding Merkle proofs from full nodes at these coordinates.

- If light nodes receive valid data shares and Merkle proofs for every sampled coordinate, this indicates with high probability that the entire block’s data is available.

Once the probability threshold for data availability is met, nodes go on to share the sampled data with their peers.
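The sampling steps above can be simulated with a short Python sketch. It is a toy model: the full node is replaced by a set of withheld coordinates, Merkle proof checking is omitted, and the function name `sample_block` and its parameters are hypothetical.

```python
import random


def sample_block(k: int, withheld: set, samples: int, rng: random.Random) -> bool:
    """Sample `samples` unique coordinates of a 2k x 2k extended matrix.

    Returns True if every sampled share is served, False as soon as one
    sampled coordinate falls in the `withheld` set.
    """
    width = 2 * k
    all_coords = [(r, c) for r in range(width) for c in range(width)]
    chosen = rng.sample(all_coords, samples)  # unique coordinates
    return all(coord not in withheld for coord in chosen)


k = 4
# Toy adversary: withhold a (k+1) x (k+1) corner of the extended matrix.
corner = {(r, c) for r in range(k + 1) for c in range(k + 1)}

rng = random.Random(0)
trials = 1000
detected = sum(not sample_block(k, corner, 17, rng) for _ in range(trials))
print(f"withholding detected in {detected} of {trials} trials")
```

With nothing withheld the function always returns True. With the corner withheld, almost every trial hits a missing share, which is the behaviour the probabilistic guarantee relies on.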

Gossiping Data Shares:

- Light nodes share the received data with other nodes in the network.

- As long as enough unique data shares are sampled and shared, honest full nodes can reconstruct the entire block data.

Overall, DAS allows Initia OSS (and therefore the whole Omnitia ecosystem) to scale its data availability layer efficiently because light nodes only need to sample a small portion of the block data. Moreover, as the number of light nodes increases, the collective ability to sample and verify block data grows, enabling support for larger blocks without burdening individual light nodes.
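A rough way to see why more light nodes help is to estimate how many distinct shares the network samples collectively. The Python sketch below assumes each node picks its coordinates uniformly and independently of other nodes; the function name and the numbers are illustrative, not Initia or Celestia parameters.

```python
def expected_unique_shares(total_shares: int, samples_per_node: int,
                           light_nodes: int) -> float:
    """Expected number of distinct shares sampled by the whole network.

    A given share is missed by one node with probability
    1 - samples_per_node / total_shares, and by all nodes with that
    probability raised to the number of nodes.
    """
    miss_one_node = 1 - samples_per_node / total_shares
    return total_shares * (1 - miss_one_node ** light_nodes)


# Illustrative numbers only: a 64 x 64 extended matrix, 17 samples per node.
for nodes in (10, 100, 1000):
    covered = expected_unique_shares(64 * 64, 17, nodes)
    print(nodes, round(covered))
```

Coverage grows with the number of nodes while the per-node cost stays fixed, which is the scaling property described above.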

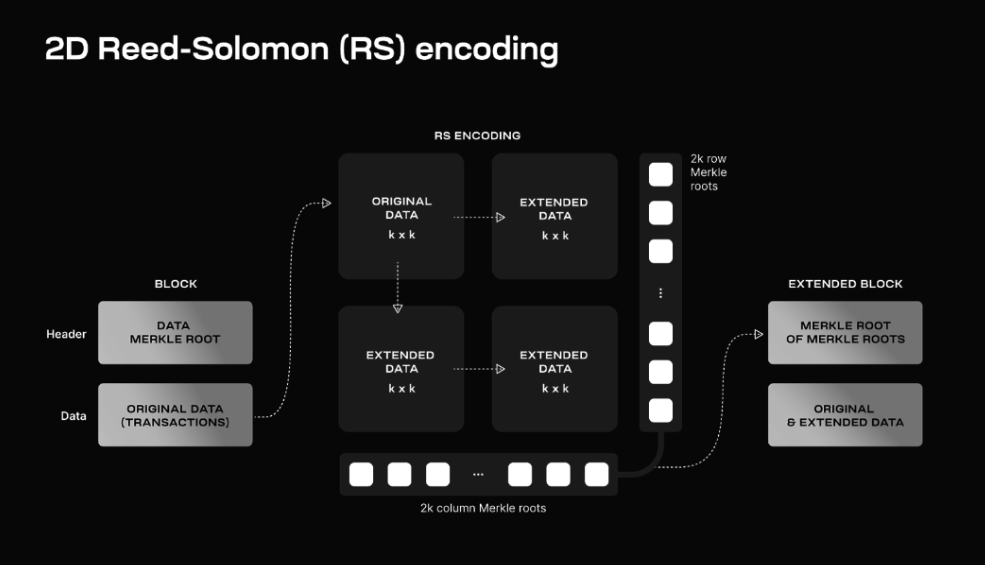

2-dimensional Reed-Solomon (RS) Encoding

2-dimensional Reed-Solomon (RS) encoding is an essential part of DAS. It is used to encode the smaller pieces of block data with redundancy and, combined with fraud proofs, ensures that all parts of this data are available and correct. Fraud proofs are necessary to detect and reject blocks with incorrectly generated extended data, ensuring that only valid data gets accepted and processed by the network.

This is how RS encoding works:

- Splitting the Data: The large block of data is divided into smaller pieces, called shares.

- Creating a Matrix: These shares are arranged into a square grid, forming a K x K matrix.

- Adding Extra Pieces: To make the data more robust, extra pieces called parity data are added to extend the matrix into a larger 2K x 2K grid.

- Making Data Tamper-Proof: Separate checks (Merkle roots) are computed for each row and column of this extended matrix. A Merkle root is like a unique summary for a row or column of data.

- Creating a Master Check: These row and column Merkle roots are then combined into a single master Merkle root, which serves as the overall commitment to the data’s integrity. This master Merkle root is stored in the block header.

The method of adding extra pieces and creating checks ensures that even if some pieces of the data are missing or damaged, the system can still verify and recover the data. To ensure the data is still available and hasn’t been tampered with, light nodes check random pieces of the larger grid. If these pieces are correct, the data is confirmed to be intact.
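The recovery property can be illustrated with a toy Reed-Solomon code in Python. This is a sketch under assumptions: it works over a small prime field chosen for readability rather than the field a production codec would use, it treats each share as a single integer, and the names `rs_extend`, `rs_recover` and `extend_2d` are hypothetical.

```python
P = 65537  # small prime modulus, chosen for readability only


def _lagrange_eval(points, x):
    """Evaluate at x the unique polynomial of degree < len(points), mod P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def rs_extend(data):
    """Treat k values as evaluations at x = 0..k-1 and append k parity values."""
    k = len(data)
    points = list(enumerate(data))
    return list(data) + [_lagrange_eval(points, x) for x in range(k, 2 * k)]


def rs_recover(known, k):
    """Recover the k original values from any k known {index: value} entries."""
    points = list(known.items())[:k]
    return [_lagrange_eval(points, x) for x in range(k)]


def extend_2d(square):
    """Extend a k x k square to 2k x 2k: rows first, then every column."""
    k = len(square)
    rows = [rs_extend(row) for row in square]
    cols = [rs_extend([rows[r][c] for r in range(k)]) for c in range(2 * k)]
    return [[cols[c][r] for c in range(2 * k)] for r in range(2 * k)]


extended = extend_2d([[1, 2], [3, 4]])
print(extended)
# Lose the first half of the first row, then rebuild it from the parity half.
print(rs_recover({2: extended[0][2], 3: extended[0][3]}, 2))  # [1, 2]
```

Any k of the 2k values in a row or column are enough to rebuild the rest, which is why a block producer has to withhold a large part of the extended matrix before data becomes unrecoverable.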

In summary, RS encoding helps keep the data safe, intact, and easy to check, so that the entire blockchain remains trustworthy and secure. The scheme is represented in the diagram below.

Namespaced Merkle Tree (NMT)

A Merkle tree, also known as a hash tree, is a fundamental data structure in blockchain technology, created to provide an efficient and secure way to verify the integrity and consistency of large data sets.

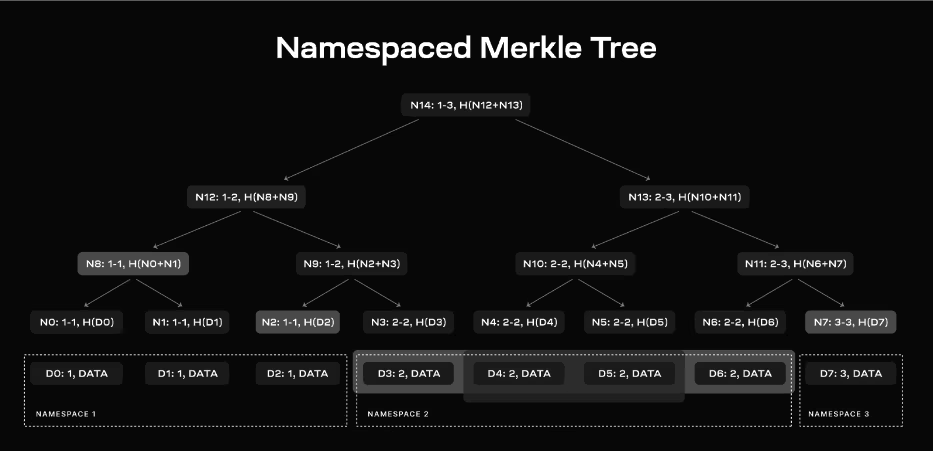

A Namespaced Merkle Tree (NMT) is a type of Merkle tree in which each node is tagged with the minimum and maximum namespace of its children. The leaves in the tree are ordered by the namespace identifiers of the messages. Namespace identifiers are a product of namespace partitioning, a process in which block data is divided into multiple namespaces and each corresponding namespace is attached to a specific application on Initia (such as Minitia rollups). Via namespaces, applications can download only the data relevant to their namespace, ignoring data from other namespaces. Additionally, Merkle proofs can be created that prove to a verifier that all elements of the tree belonging to a specific namespace have been included.

NMT Data Flow:

- Data Arrangement: Block data is partitioned into namespaces, each namespace containing a sequence of data shares.

- Tree Construction: Leaves are arranged by namespace, and internal nodes are computed such that each node includes the hash of its children and the range of namespaces covered by those children.

- Data Verification: Once the tree is constructed, data can be verified on demand. When an application requests data for a specific namespace, it receives the relevant data shares and the necessary internal nodes as proofs. The application can use these proofs to verify that the data shares are part of the block data and that all data for the requested namespace has been provided.

As an analogy, an NMT can be viewed as a well-organised library where each section (namespace) holds books (data shares) on a specific topic. Each section of the library has its own unique identifier, and the shelves are arranged in order, making it easy to find all books on a particular topic without searching the entire library.

How it works:

- Data Arrangement: Books are divided into sections based on their topic (namespace).

- Tree Construction: Each shelf (node) holds the identifiers of books in its section, creating a map of the entire library.

- Data Verification: When you request books on a specific topic, you get the list of books and their locations, ensuring you have all the books for that topic without unnecessary ones.

To put the above process into perspective, let’s consider a Namespaced Merkle Tree with 8 data shares divided into 3 namespaces:

- Namespace 1: Data shares D1, D2

- Namespace 2: Data shares D3, D4, D5, D6

- Namespace 3: Data shares D7, D8

The NMT would look like this:

*Nodes N12, N8, N9, etc., include the range of namespaces they cover.

If an application requests data for Namespace 2, it receives:

- Data shares: D3, D4, D5, D6

- Internal nodes: N8, N7 (and already has root N14 from the block header)

The application can then verify the data shares are part of the block data and check the namespace ranges in the provided nodes to ensure all data for Namespace 2 is included.
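The 8-share example can be reproduced with a minimal NMT sketch in Python. It is a simplified illustration, not Celestia’s NMT library: namespaces are small integers, the leaf count is assumed to be a power of two, and the names `Node`, `build`, `prove_range` and `verify` are hypothetical. The sketch does not use the N-numbered node labels from the example above.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    min_ns: int
    max_ns: int
    digest: bytes


def leaf(ns: int, data: bytes) -> Node:
    return Node(ns, ns, hashlib.sha256(b"\x00" + bytes([ns]) + data).digest())


def parent(left: Node, right: Node) -> Node:
    # The hash covers both children's namespace ranges and digests.
    payload = (b"\x01" + bytes([left.min_ns, left.max_ns]) + left.digest
               + bytes([right.min_ns, right.max_ns]) + right.digest)
    return Node(left.min_ns, right.max_ns, hashlib.sha256(payload).digest())


def build(leaves):
    """Return all levels, leaves first, root last (power-of-two leaf count)."""
    levels = [leaves]
    while len(levels[-1]) > 1:
        cur = levels[-1]
        levels.append([parent(cur[i], cur[i + 1]) for i in range(0, len(cur), 2)])
    return levels


def prove_range(levels, start, end):
    """Subtree roots lying entirely outside leaves[start:end], left to right."""
    proof = []

    def walk(level, idx):
        lo, hi = idx << level, (idx + 1) << level
        if hi <= start or lo >= end:
            proof.append(levels[level][idx])
        elif level > 0:
            walk(level - 1, 2 * idx)
            walk(level - 1, 2 * idx + 1)

    walk(len(levels) - 1, 0)
    return proof


def verify(root, ns, start, shares, proof, n_leaves):
    """Check inclusion of `shares` and completeness for namespace `ns`."""
    end, nodes, complete = start + len(shares), iter(proof), True

    def walk(lo, hi):
        nonlocal complete
        if hi <= start or lo >= end:
            node = next(nodes)
            # Anything left of the range must be below ns, anything right above.
            if (hi <= start and node.max_ns >= ns) or (lo >= end and node.min_ns <= ns):
                complete = False
            return node
        if hi - lo == 1:
            return leaf(ns, shares[lo - start])
        mid = (lo + hi) // 2
        return parent(walk(lo, mid), walk(mid, hi))

    try:
        return walk(0, n_leaves) == root and complete
    except StopIteration:
        return False


shares = [b"D1", b"D2", b"D3", b"D4", b"D5", b"D6", b"D7", b"D8"]
namespaces = [1, 1, 2, 2, 2, 2, 3, 3]
levels = build([leaf(ns, d) for ns, d in zip(namespaces, shares)])
root = levels[-1][0]
proof = prove_range(levels, 2, 6)  # Namespace 2 occupies leaves 2..5
print(len(proof), verify(root, 2, 2, shares[2:6], proof, 8))  # 2 True
```

In this sketch the proof for Namespace 2 consists of two internal nodes, one covering D1 and D2 and one covering D7 and D8. If a responder left out D6, the proof would have to include a leaf tagged with namespace 2 to the right of the range, and the completeness check would fail.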

Overall, an NMT makes data retrieval efficient because each application retrieves only the data for its own namespace, saving bandwidth and processing resources. Proof of completeness is also ensured, since applications can verify they have received all data for their namespace. Finally, by partitioning data into namespaces, the system can efficiently handle multiple applications and large volumes of data, which underpins scalability.

Conclusion

In conclusion, Omnitia Shared Security (OSS) provides a robust, scalable security framework that leverages advanced technologies such as Celestia’s light nodes, Data Availability Sampling (DAS), 2-dimensional Reed-Solomon Encoding (RS), and Namespaced Merkle Trees (NMT). By integrating Celestia light nodes within Initia’s validator nodes, OSS enables efficient data verification across interconnected Layer 2 (L2) Minitias without the need to download complete blocks, thereby reducing resource consumption while maintaining high security guarantees. The use of DAS ensures that block data is available and verifiable with minimal hardware requirements, facilitating widespread participation and enhancing decentralisation. RS encoding adds an extra layer of data integrity and tamper-proofing, while NMTs enable efficient data retrieval and verification for application-specific namespaces. Collectively, these components enable OSS to not only support the scalability and flexibility of the Initia ecosystem but also uphold the security and reliability necessary for its widespread adoption and efficient operation.

This article is provided for informational purposes only and is not intended as investment advice. Investing in cryptocurrencies carries significant risks and is highly speculative. The opinions and analyses presented do not reflect the official stance of any company or entity. We strongly advise consulting with a qualified financial professional before making any investment decisions. The author and publisher assume no liability for any actions taken based on the content of this article. Always conduct your own due diligence before investing.